Back to school: How to measure a good teacher

For most of her 11 years of teaching elementary school, Amanda Newman was considered a perfect teacher. She almost always walked out of her principal’s annual evaluations with a score of 144 out of 144, a smile on her face, and no suggestions for improvement. She knew she was good at turning out second-graders who could add, subtract, and spell – but she suspected her “perfect” evaluations masked much that she could do better.

Indeed, when her Hillsborough County, Fla., school district overhauled its teacher evaluation program two years ago, Ms. Newman was no longer considered “perfect.” But with new, specific guidelines and feedback on her teaching technique, Newman explains, she began to push the kids in ways she never would have thought second-graders capable of, and their performance soared. The mean standardized test score for reading in her second-grade class moved from the 41st percentile in 2010 to the 60th this year; in math the mean moved from the 50th to the 69th percentile.

In the past decade of national anxiety over the quality of American public education, no area in education reform has gotten more attention than teacher quality, and few reforms have encountered as much pushback as efforts to change how teachers are evaluated.

Spurred by Race to the Top, the competitive Obama administration grant program, numerous states are now rushing to implement intensive teacher evaluation systems that, in most cases, are heavily influenced by test-score gains, which can affect a teacher’s employment status and pay.

Done right, say advocates, strong evaluation systems could be a game changer for both teachers and their students, reshaping the profession and pushing teachers to improve.

“How do you change results for kids if you don’t change the way we’re teaching?” asks Dan Weisberg, executive vice president at The New Teacher Project (TNTP), a teacher-training and policy nonprofit that advocates the overhaul of teacher evaluations. “If you don’t know who your best teachers are, how do you work to promote and retain them? You need to identify teachers struggling and do your best to get them to a satisfactory level or, if you can’t, get them exited. There’s no way to change results for kids without doing those things, and you can’t do those things if you don’t have a good system to accurately measure performance.”

Research indicates that the quality of teaching has more impact on student learning than any other factor a school can control. Studies show that a child who spends a year with a good teacher can learn up to three times as much as a student with a poor teacher.

But the mechanics of quantifying good teaching are tricky. How can districts discern who the best – and worst – teachers are? Are test scores reliable? Is observation too subjective? How does something as subtle and nuanced as teaching a roomful of individuals – a job that is arguably more art than science – get reduced to a score?

Almost everyone agrees: There’s no single foolproof measure of a teacher. Standardized tests give one indication of what students are learning. Observations – when the observer is trained well and looking for specific details – can offer more nuance.

But how do you evaluate the essence of a teacher who inspires a true love of learning and problem solving versus one who just gets students to master certain concepts? And what about all the factors a teacher has no control over, such as family life, poverty, or a student who is having a bad day when it’s time to take a test?

There is consensus that the status quo doesn’t work. The majority of districts in the United States are like the one in which Newman found herself always “perfect,” or close to it. Principals come in for a required observation every few years, from which the teachers get little feedback, and nearly all teachers are considered good.

A landmark 2009 TNTP study of 12 districts in four states found that more than 99 percent of teachers were rated satisfactory. The study confirmed what many in the education field already knew: Traditional “evaluations” gave teachers and their administrators very little useful feedback.

For now, the mantra in redesigning evaluations is “multiple measures.”

Emphasize student learning and growth, yes, but don’t judge teachers solely on standardized test scores. Make classroom observations count, and train evaluators and teachers so that both have a common understanding of the specifics the observer is looking for. Use varied measures of student learning, including, perhaps, student portfolios, internal assessments and checks, and teachers’ goals for their students. Even – crazy as it might sound – ask students about their teachers.

What’s important “is ensuring that each of those measures contributes something fairly unique and different to the picture,” says Vicki Phillips, director of the Education, College Ready United States Program for the Bill & Melinda Gates Foundation. The foundation has invested $290 million in teacher evaluation programs in three urban districts, including Hillsborough County, and five Los Angeles charter-school networks.

The Gates foundation’s long-term Measures of Effective Teaching (MET) study has in recent years delivered some of the most thorough research on teacher evaluations. It found that the most important elements are detailed observations tied to a specific rubric; student achievement growth, measured in most cases by value-added test scores that try to isolate the teacher’s contribution to students’ scores; and student surveys rating teachers on such factors as how well they support students, challenge them, and give them feedback.
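Real value-added models used by districts are far more elaborate than anything that fits here: they adjust for many student characteristics and statistically dampen noisy estimates. As a rough illustration only, the sketch below assumes the simplest possible version. It predicts each student's current score from the prior-year score with a single straight-line fit, then credits each teacher with the average amount by which his or her students beat or missed that prediction. The function names and the data are hypothetical.

```python
from statistics import mean


def fit_line(xs, ys):
    """Ordinary least-squares fit of y = a + b * x; returns (a, b)."""
    x_bar, y_bar = mean(xs), mean(ys)
    sxx = sum((x - x_bar) ** 2 for x in xs)
    sxy = sum((x - x_bar) * (y - y_bar) for x, y in zip(xs, ys))
    b = sxy / sxx
    return y_bar - b * x_bar, b


def value_added(records):
    """Toy value-added estimate (hypothetical helper, not any district's model).

    records: list of (teacher, prior_score, current_score) tuples.
    Returns {teacher: mean residual}, where a residual is how far a student
    landed above (+) or below (-) the score predicted from prior achievement.
    """
    prior = [p for _, p, _ in records]
    current = [c for _, _, c in records]
    a, b = fit_line(prior, current)
    residuals = {}
    for teacher, p, c in records:
        residuals.setdefault(teacher, []).append(c - (a + b * p))
    return {t: mean(rs) for t, rs in residuals.items()}


# Made-up example: two students each for teachers "A" and "B".
records = [("A", 50, 56), ("A", 60, 66), ("B", 50, 52), ("B", 60, 62)]
print(value_added(records))  # {'A': 2.0, 'B': -2.0}
```

With only a handful of students per teacher, one or two unusual scores can move a teacher's average residual a long way, which is one reason such estimates can swing from year to year.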

“When you add them together, the corroboration becomes even stronger … and you get a much more reliable picture,” says Ms. Phillips.

Policy reform brings psychological shift

After a peer evaluator watched Newman teach three-digit addition and subtraction problems to her second-graders last year, the evaluator noted that Newman could do a better job asking her students to evaluate their own work, and suggested she do more to encourage them to reflect on and analyze their learning.

Newman was leery, especially when her assistant principal pushed her not only to incorporate “quick writes” – five-minute written reflections by students – at the end of each lesson, but also to have the students themselves create a rubric to score those quick writes. After all, she pointed out, these were 7-year-olds.

“I thought he was crazy,” Newman says of her reaction when her boss explained the district’s shift to “student ownership.” He countered that one whole column of the new observation rubric is about having students take initiative.

But, Newman now admits, the results exceeded her wildest expectations and led her to rethink how she could push her students even more. The students sorted the quick-write cards, talked about what the cards had in common, and then put those characteristics on a chart under scores of 0, 1, 2, or 3. They gave higher scores to cards that used math vocabulary and a full explanation, and lower scores to cards that didn’t offer enough support.

“Sometimes we underestimate what kids can do,” Newman says, noting that she’s shared the experience with other teachers, some of whom are now trying the same things. “Once I made that shift and saw what this looks like for 7-year-olds, I saw what it could look like for 6-year-olds. I told people, it’s not just pretend.”

Newman says her classroom learning culture moved from good to “incredible. And it was all based on the observation feedback we got.”

It’s not easy, though, she says, for Type A teachers used to receiving nothing but praise: “The psychology of the shift we’ve undergone has been one of the hardest things for teachers.”

Last year, an evaluation score of 71.5 out of 100 – what might look like barely a C to most teachers – actually put a teacher in the top 11 percent, Newman says, but at first that was tough for teachers to accept.

Many teachers in Hillsborough County are, like Newman, coming to view the evidence-based feedback as a gift, not a threat. And it helps that the district involved teachers in the planning process from the beginning, and got the local union to sign off on it.

The system, now in its second year, is still being tweaked, but for now Hillsborough County’s multiple-measure teacher evaluation breaks down this way: 40 percent student achievement, determined by standardized test scores; 30 percent principal observation; and 30 percent peer-evaluator observation. (Next year, the principal’s observations will count more.)
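The arithmetic behind a split like this is a weighted sum. The sketch below assumes, hypothetically, that each component has already been converted to a common 0-100 scale; the article does not say how Hillsborough County scales or combines its components, and the names here are illustrative only.

```python
# Weights assumed from the 40/30/30 split described above. Component scores
# are assumed (hypothetically) to already be on a common 0-100 scale.
WEIGHTS = {
    "student_achievement": 0.40,
    "principal_observation": 0.30,
    "peer_observation": 0.30,
}


def composite_score(components, weights=WEIGHTS):
    """Weighted sum of component scores; raises if any component is missing."""
    missing = weights.keys() - components.keys()
    if missing:
        raise ValueError(f"missing components: {sorted(missing)}")
    return sum(weights[name] * components[name] for name in weights)


score = composite_score(
    {"student_achievement": 70, "principal_observation": 80, "peer_observation": 60}
)
print(round(score, 2))  # 70.0
```

Keeping the weights in one table passed as a parameter means a change such as giving principal observations more weight next year only requires a different set of weights, not new logic.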

Eventually, the scores will affect pay, except for some veteran teachers who choose not to opt in to the new pay scale.

The district trained hundreds of accomplished teachers to be peer evaluators and mentors, working as coaches for relatively new teachers while observing and evaluating other teachers in the district.

Poor reform may mean revolt

Newman’s enthusiastic response stands in marked contrast to the reaction in some other districts, where teachers are in revolt over new evaluation measures.

Take the case of the “outing” of New York City’s “worst teacher,” splashed in the tabloid New York Post last February. It was an extreme example of how evaluation reform can spread fear and loathing among teachers.

The city’s school system developed a complex formula that predicts how a teacher’s students should perform on exams each year, and then ranks the teacher by comparing how those students actually did with how other teachers’ students did.

The scores are in theory for internal use, but this year the city released them to the media. The Post crowned a sixth-grade English-as-a-second-language teacher the worst, even though the city says there is a margin of error of 35 percentiles in math and 53 percentiles in language arts, even though her principal and colleagues agree she’s excellent, and even though the scores showed glaring anomalies.

Misidentifying the worst – or best – teachers, and then wrongly firing or rewarding them, is only the most visible of the unintended consequences of a poorly designed evaluation system, say some education experts. Other risks include pushing teachers to teach to a flawed test and pitting teachers against one another.

Small details in how the systems are rolled out have made a big difference in the districts and states that have overhauled teacher evaluation. Involving teachers in a meaningful way in the design, for instance, is key, as are good communication and shifting resources to fund the new plan.

In some areas, teachers and principals are up in arms over what they see as an attack on the teaching profession and faulty scoring systems.

The push for better evaluation systems “is the fastest and most controversial reform we have right now,” says Elena Silva, a former senior policy analyst at Education Sector, a policy nonprofit. While Ms. Silva agrees that meaningful evaluations are necessary, she’s not sure that the rapid push – or the focus on ousting the worst performers – has been helpful.

“The movement around teacher evaluation reform was pushed fairly boldly and quickly around the accountability issue,” Silva says. “And that was a mistake. It left teachers behind and didn’t attend to the vulnerabilities and insecurities teachers feel.”

Instead of just focusing on how to punish or reward the worst or best 5 percent of teachers, she notes, “the larger solution lies with the 90 percent [in the middle], and helping that 90 percent improve and creating the conditions necessary for them to do their job well.”

Testing is a blunt instrument

Most agree student learning should be at the heart of teacher evaluations – but what happens when the tests are flawed?

When scoring math tests this year, teachers were constantly calling the testing company about mistakes on the test, says Katie Zahedi, principal of Linden Avenue Middle School in Red Hook, N.Y. On a few questions, her teachers disagreed strongly with Pearson, the testing company, about how the answers should be scored.

Nancy Keeney, an English and reading teacher at Linden Avenue, says that the tests have also started to affect how she teaches – for the worse. The reading test, for instance, asks students to read a poem or short excerpt and then dissect the various elements into a “graphic organizer.”

“This isn’t something English teachers would teach if it weren’t on the test,” Ms. Keeney says, “but now you have to teach kids how to do that…. I have never seen any kid sit up straighter and spark to life when going over graphic organizers and learning how to identify correct answers.”

As a recipient of Race to the Top funds, New York has been working to roll out the evaluation system the program’s rules require, a system that local unions must sign on to district by district. The result has been a complicated system that few understand and almost no one seems happy with. Many districts still haven’t reached an agreement with their unions, and as of this spring, nearly 1,500 New York principals – a third of all public school principals in the state – had signed an open letter of concern about the state’s approach.

Meanwhile, the state has been cited by the federal government for its failure to follow through on the promises it made in its application, particularly relating to putting the new evaluation system in place.

In their letter, the New York principals cite a number of concerns, but the big one comes down to test scores, and the value-added model that, in theory, isolates the teacher’s contribution to a student’s improvement on standardized tests.

In New York, other measures, including observations, also factor into evaluations, but the state has determined that no teacher who is deemed “ineffective” based on student test scores can be deemed “effective” overall – even though student scores account, in many districts, for only 20 percent of a teacher evaluation. The trouble, say critics, is that value-added scores are highly flawed and particularly unstable with certain groups of students, including English-language learners, special-education students, and gifted students.
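The rule described above works as an override on top of a weighted score. This minimal sketch assumes a hypothetical four-level rating scale and assumes the override simply caps the result one level below “effective”; the article does not say how far down the overall rating actually drops. It shows how a component worth only 20 percent can still decide the outcome.

```python
# Hypothetical four-level scale, lowest to highest.
SCALE = ["ineffective", "developing", "effective", "highly effective"]


def overall_rating(test_rating: str, combined_rating: str) -> str:
    """Return the final rating after applying the test-score override.

    test_rating: rating from student test scores alone.
    combined_rating: rating from all measures weighted together.
    If tests alone say "ineffective", the final rating is capped just below
    "effective" (an assumed cap), however strong the other measures are.
    """
    if test_rating == "ineffective":
        cap = SCALE.index("effective") - 1
        return SCALE[min(SCALE.index(combined_rating), cap)]
    return combined_rating


# Strong observations cannot lift the rating past the cap.
print(overall_rating("ineffective", "highly effective"))  # developing
print(overall_rating("effective", "highly effective"))    # highly effective
```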

“I went from being very enthusiastic about these methods to extremely worried,” says Linda Darling-Hammond, a Stanford education professor who has researched teacher evaluation extensively and says she’s seen value-added scores bounce around wildly even when she and other researchers believed they’d controlled for all student characteristics.

A few small anomalies, she says, can make a big difference in how a teacher appears to be doing.

Ms. Darling-Hammond cites a New York City teacher whose scores showed her to be the worst eighth-grade math teacher in the city, even though her kids – part of a gifted and talented class – all passed the state’s Regents exam for integrated algebra, a test they normally wouldn’t take until late in high school. The students appeared to have moved far beyond the material they were tested on.

“If you had a basket of evidence, you would have seen that she got the eighth-graders to pass the Regents, seen that the scores were high. You might have also seen classroom evidence. You would have said ‘this teacher is kicking it out,’ ” says Darling-Hammond. “The issue is not, ‘Do we look at student learning?’ The issue is, ‘How do you look at the data?’ ”

But others believe that, even while flawed, value-added data is still in many cases the best way to look at a teacher’s impact on student learning – so long as it’s combined with other tools.

“There are a million good, legitimate concerns, but there’s also a philosophical element … which is being willing to be judged by something that’s truly objective,” says Mr. Weisberg at TNTP.

“We don’t have the same sort of debates about whether we’ve squeezed all the potential for unfairness out of evaluation systems for surgeons or engineers,” Weisberg says. “How long do you want to wait until we have a system that satisfies all the concerns?”

Phillips, at the Gates foundation, notes that in her organization’s study, value-added scores tend to correlate highly with other, more nuanced assessments of teaching quality.

Value-added “is volatile, but not so volatile that it’s not predictive,” says Phillips. “But some assessments it’s based on aren’t as great as you’d like, either. It’s why you want multiple measures.”

The nuance of multiple measures

Student test scores are far less controversial when they’re not the only piece of evidence being used in an evaluation – or when administrators have some discretion to interpret those scores.

That’s the case at the Achievement First (AF) schools, a growing network of 20 charter schools in New York and Connecticut that has one of the most comprehensive, and harmonious, evaluation systems around – all initiated by teachers. While test scores are key (they count for 40 percent of the teacher’s overall evaluation in cases where a standardized state test exists), school leaders understand their limitations and can use professional judgment to weigh those scores.

And the test scores are just one piece of a system that also includes detailed observations from in-school and out-of-school evaluators, and surveys of students, peers, administrators, and parents. Every teacher is assigned a coach, who helps them improve and hone their craft in weekly or biweekly meetings.

“We have a culture of continuous improvement, and teachers are intent on getting better,” says Kate Baker, the principal at AF Bridgeport Academy Elementary School in Connecticut.

This spring, at the end of his first year teaching first grade at AF Bridgeport, Ted Eckert received an 11-page evaluation summary.

It included the results of surveys of his students’ families, fellow teachers, and administrators, along with four observation scores: three from formal observations, and a fourth based on frequent informal observations. In the fall, he’ll get his students’ test scores.

“It was a really complete picture of not only me as an instructor and teacher, but as a professional on a staff with lots of moving parts,” says Mr. Eckert. In his five previous years teaching in the Bronx and Queens, he says he got “nothing even close to as systematized as I experienced this year.”

AF’s evaluation system began several years ago, when teachers started asking for more support to help them improve, and also for a career path that could allow them to stay in the classroom while still moving up into leadership positions. Teachers met with school leaders, and together they came up with the current system.

“We knew what we included would help drive teacher behaviors, so we wanted to make sure the right stuff was in there,” says Sarah Coon, AF’s senior director of talent development.

In AF’s model, teachers are grouped into five “buckets” of experience, from Stage 1 (“intern”) through Stage 5 (“master teacher”). As they move up, they have the chance for increased recognition, an increased salary scale, and more leadership and professional development opportunities.

A master teacher has control over a $2,500 professional development budget, can serve as a coach and get extra coaching sessions from experts, and works with a teaching and learning team on things like curriculum development.

“Had we done this without teachers’ full involvement, we might have come up with different rewards,” says Ms. Coon.

This was the first year, and so far, teachers seem to be relishing the feedback.

“The rubric is so specific that you can understand where the scores are coming from,” says Katie Maro, a third-grade teacher at AF Crown Heights Elementary School in Brooklyn, N.Y. Ms. Maro says she understands why so many teachers are resistant to an evaluation that is heavy on test scores. But given that these scores come in a rich context, with so many other factors, she says she feels fine with that being a piece of it.

“AF has taken a lot of time to make sure I don’t see myself as a number,” she says.

Even more important, say other AF teachers, they have supports that help them use the feedback to improve.

“The times I’ve been most successful with helping teachers develop are when we identify growth areas in observation and then work on them in weekly coaching,” says Ms. Baker, the Bridgeport principal, noting that she – like everyone – has a coach she works with. “It’s hard to make forward momentum with just a few observations. It needs to be tied to an ongoing practice of coaching and development.”

What to do with the measurement

Measuring teaching only means something if those results are put to good use: to identify – and possibly remove – the very worst teachers, if necessary, and to support teachers and help them get better.

“Can we improve struggling teachers? It’s one of the things we don’t know,” says Weisberg. Good evaluation systems, he hopes, can help districts target their professional development wisely to help those teachers.

But, he says, when teachers don’t improve they need to leave the classroom.

Likewise, many reformers feel there is untapped potential for the best teachers. They could reach more students through larger classes or smart use of technology. Or schools can give them leadership and mentoring opportunities to help fellow teachers. These possibilities could have the added benefit of attracting talented teachers to the profession, by creating new career ladders and offering more rewards, say advocates.

“Where quality systems are going in place, even teachers who are heavily skeptical and worried about it to begin with … say, ‘This helped me change my practice, it helped me improve,’ ” says Phillips.

Evidence of that is mounting in Hillsborough County, where first- and second-year teachers are now assigned a mentor.

Aimee Ballans says she watched newer teachers there make big leaps this past year when she could tie her coaching to the specific observation rubric that’s part of the new system.

In one case, she helped a high school reading teacher who was struggling with a group of boys in her last class of the day. The teacher was reluctant, but Ms. Ballans pushed her to try a form of structured debate in which the kids could get up, move around, and switch sides.

“At the end of the class period, we had every kid participating, and the kids said, ‘We thought we were just going to come in here and read today, but this was awesome,’ ” Ballans recalls. “We had a great conversation afterwards where we really dug down into why this worked.”

Helping that second-year teacher see that the students’ previous disengagement wasn’t their fault was “the best gift I could give her,” Ballans says.


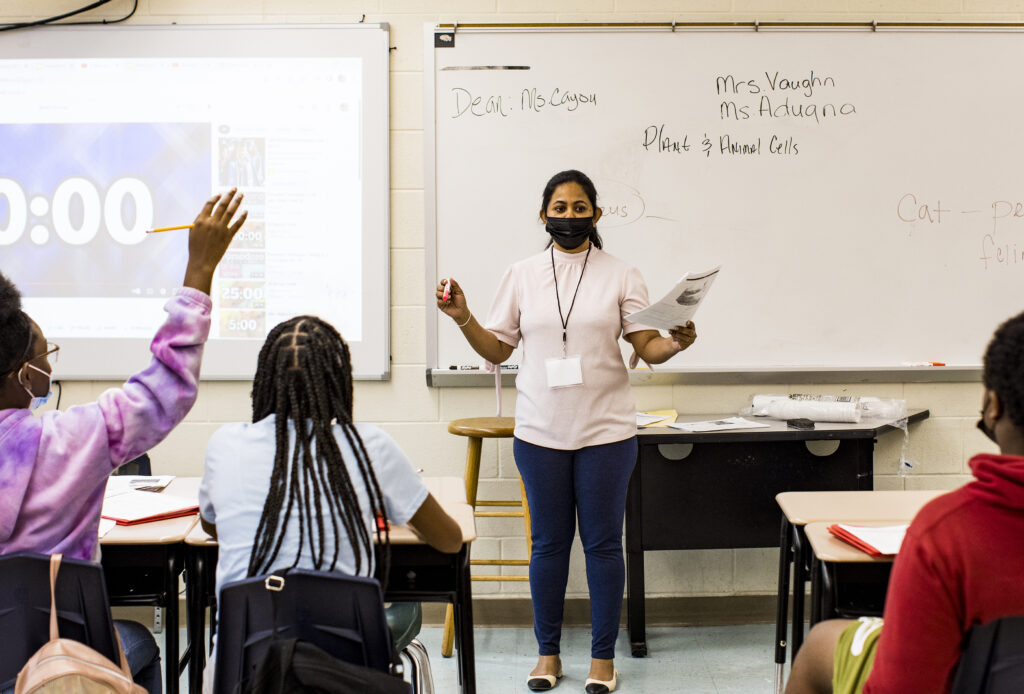

Imali Ariyarathne, seventh-grade teacher at Langston Hughes Academy, introduces her students to the captivating world of science.

About TNTP

TNTP is the nation’s leading research, policy, and consulting organization dedicated to transforming America’s public education system so that every young person thrives.

Today, we work side-by-side with educators, system leaders, and communities across 39 states and over 6,000 districts nationwide to reach ambitious goals for student success.

Yet the possibilities we imagine push far beyond the walls of school and the education field alone. We are catalyzing a movement across sectors to create multiple pathways for young people to achieve academic, economic, and social mobility.